Welcome to the Container-based High Performance Computing Center!

This page provides information on the Container-based High Performance Computing Center (CBRZ) at HSU, which is administered by the Chair for High Performance Computing.

If you have any questions, feel free to contact info-cbrz@hsu-hh.de.

For Newbies

- Which compute systems are available at CBRZ?

Currently, the CBRZ hosts:

- the supercomputer HSUper, which features roughly 580 compute nodes for compute- and data-intensive workloads. HSUper was ranked 339 in the TOP500 list of June 2022.

- the interactive scientific computing cloud (ISCC). The ISCC aims to fill the gap for users with specific needs that cannot be containerized, as well as for users whose workloads exceed what existing local machines can handle but do not yet require the full power of HSUper.

- How can I get access to the computational resources?

- If you are a member of HSU: please apply for HSUper access using the following form (available on campus/VPN only, login with RZ credentials) or this form for the ISCC. Alternatively, navigate the ticket system website to find further forms: Ticket Templates » CBRZ » CBRZ: HSUper Access Registration or CBRZ: ISCC Resources & Administration Access Registration.

- If you are not a member of HSU but are linked to HSU through a collaborative project with HSU partners: please file a guest account form together with the responsible HSU partner. Afterwards, apply for HPC access (from this point on, the workflow is the same as for HSU members).

- In all other cases: please contact the CBRZ team to check for HPC access possibilities.

For Users

More information on computational resources:

More information on HPC competences (workshops, tutorials, material, etc.) can be found on the websites of the HPC Portal, established by the project hpc.bw funded by dtec.bw – Digitalization and Technology Research Center of the Bundeswehr. dtec.bw is funded by the European Union – NextGenerationEU.

CBRZ Infrastructure

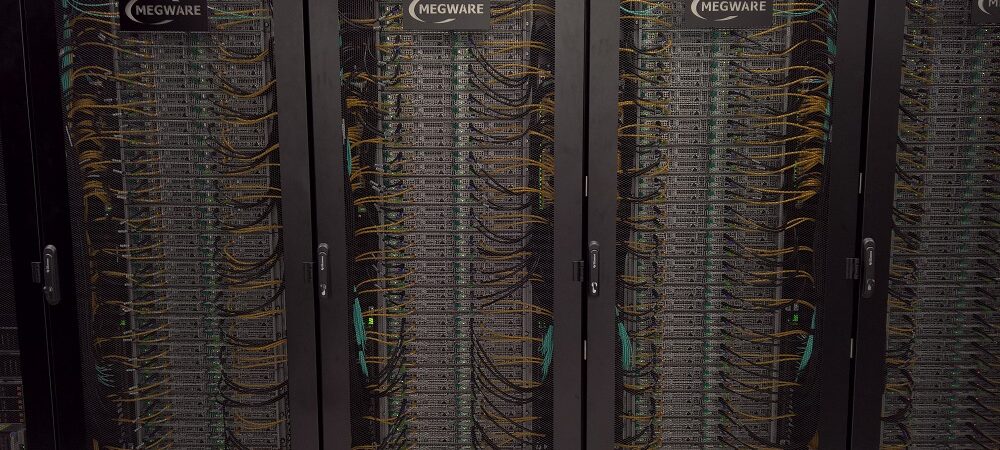

HSUper is housed in four 20-foot containers. Its 9 racks currently contain:

- 571 liquid-cooled computing nodes,

- 5 fat-memory (1 TB) liquid-cooled computing nodes,

- 5 GPU nodes (2 NVIDIA Tesla A100 PCIe 40 GB HBM2 per node),

- 4 GPU nodes (8 NVIDIA L40S per node),

- 1 PB BeeGFS storage,

- 1 PB CEPH storage,

- networking & uninterruptible power supply hardware.

One rack contains up to 92 computing nodes and 5 switches.

Each node consists of:

- 2 Intel Xeon Scalable Platinum 8360Y processors (dual-socket), 36 cores each @ 2.40 GHz

- 256 GB RAM (fat-memory and L40S GPU nodes: 1 TB)

- NVIDIA InfiniBand HDR100 (non-blocking)
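The node counts and per-node figures listed above can be combined into aggregate totals. The following is a minimal sketch of that arithmetic, not an official capacity statement. It assumes that every node type (including the GPU nodes) carries the same dual-socket CPU configuration and that 1 TB is counted as 1024 GB; the names `NODE_TYPES` and `hsuper_totals` are purely illustrative.

```python
# Node counts, GPUs per node and RAM per node as listed on this page.
# Assumption: all node types share the dual-socket, 36-cores-per-socket CPUs.
# Assumption: 1 TB of RAM is counted as 1024 GB.
NODE_TYPES = {
    # name: (node_count, gpus_per_node, ram_gb_per_node)
    "regular": (571, 0, 256),
    "fat-memory": (5, 0, 1024),
    "gpu-a100": (5, 2, 256),
    "gpu-l40s": (4, 8, 1024),
}

SOCKETS_PER_NODE = 2
CORES_PER_SOCKET = 36


def hsuper_totals(node_types=NODE_TYPES):
    """Return aggregate node, CPU core, GPU and RAM figures."""
    nodes = sum(count for count, _, _ in node_types.values())
    cores = nodes * SOCKETS_PER_NODE * CORES_PER_SOCKET
    gpus = sum(count * gpus for count, gpus, _ in node_types.values())
    ram_gb = sum(count * ram for count, _, ram in node_types.values())
    return {"nodes": nodes, "cores": cores, "gpus": gpus, "ram_gb": ram_gb}


if __name__ == "__main__":
    # Under the assumptions above: 585 nodes, 42120 cores, 42 GPUs, 156672 GB RAM
    print(hsuper_totals())
```

Under these assumptions the sum comes to 585 nodes, which is consistent with the figure of around 580 compute nodes quoted in the introduction.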

A heat pump system enables sustainable reuse of hot liquids for building heating.

The ISCC cluster consists of 13 hosts in total, with the same hardware specifications as HSUper, except for the interconnect, which provides 50 Gb/s over Ethernet instead of InfiniBand HDR100.

- Regular hosts: 10 hosts, each equipped with 256 GB RAM and 2 Intel Ice Lake sockets; each socket features an Intel Xeon Platinum 8360Y processor with 32 cores, yielding a total of 64 cores per host.

- GPU hosts:

  - 2 hosts, each equipped with 1 TB RAM, 2 Intel Ice Lake sockets, 8 NVIDIA A30 (24 GB) GPUs and 2 TB local scratch storage; each socket features an Intel Xeon Platinum 8360Y processor with 32 cores, yielding a total of 64 cores per host.

  - 1 host equipped with 2 TB RAM, 2 Intel Ice Lake sockets, 5 NVIDIA L40S (48 GB) GPUs and 2 TB local scratch storage; each socket features an Intel Xeon Platinum 8360Y processor with 32 cores, yielding a total of 64 cores per host.
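As a quick consistency check, the ISCC host groups listed above can be tallied to confirm the stated total of 13 hosts and to derive the GPU count per model. This is a rough sketch only: it uses the 64 cores per host stated for the ISCC, counts 1 TB as 1024 GB (an assumption), and the names `ISCC_GROUPS` and `iscc_summary` are hypothetical.

```python
# ISCC host groups as described on this page.
# Assumption: 1 TB of RAM is counted as 1024 GB.
ISCC_GROUPS = [
    {"hosts": 10, "ram_gb": 256, "gpus": 0, "gpu_model": None},
    {"hosts": 2, "ram_gb": 1024, "gpus": 8, "gpu_model": "A30"},
    {"hosts": 1, "ram_gb": 2048, "gpus": 5, "gpu_model": "L40S"},
]

CORES_PER_HOST = 2 * 32  # two sockets with 32 cores each, as stated for the ISCC


def iscc_summary(groups=ISCC_GROUPS):
    """Tally hosts, CPU cores, RAM and GPUs per model across all host groups."""
    hosts = sum(g["hosts"] for g in groups)
    gpus_by_model = {}
    for g in groups:
        if g["gpus"]:
            model = g["gpu_model"]
            gpus_by_model[model] = gpus_by_model.get(model, 0) + g["hosts"] * g["gpus"]
    return {
        "hosts": hosts,
        "cores": hosts * CORES_PER_HOST,
        "ram_gb": sum(g["hosts"] * g["ram_gb"] for g in groups),
        "gpus_by_model": gpus_by_model,
    }


if __name__ == "__main__":
    print(iscc_summary())
```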

HPC-related Projects

The following projects are related to the CBRZ and its computational resources:

- hpc.bw: Competence Platform for Software Efficiency and Supercomputing (Prof. Neumann, supported by dtec.bw)

- MaST: Macro/Micro-Simulation of Phase Decomposition in the Transcritical Regime (Prof. Neumann, supported by dtec.bw)

- SuMo: Sustainability for Molecular Simulation in Process Engineering (Prof. Neumann, supported by DFG)

Last modified: 30 December 2024